Quantum breakthroughs: Google, Microsoft, and Amazon are racing to reshape the future

Google, Microsoft, and Amazon have announced major quantum computing breakthroughs, each taking a different approach to the field's core challenges. Plus, what does this mean for AI?

While we are overwhelmed by AI news on one side, is anyone else surprised by the number of quantum computing announcements of late?

Google, Microsoft, and Amazon have all announced major hardware breakthroughs in the last few months. Here we look at:

The differences in strategy, approach, and technical innovations.

How each company is tackling the technical challenges of error correction, scaling, and decoherence.

What this means for AI in the long term.

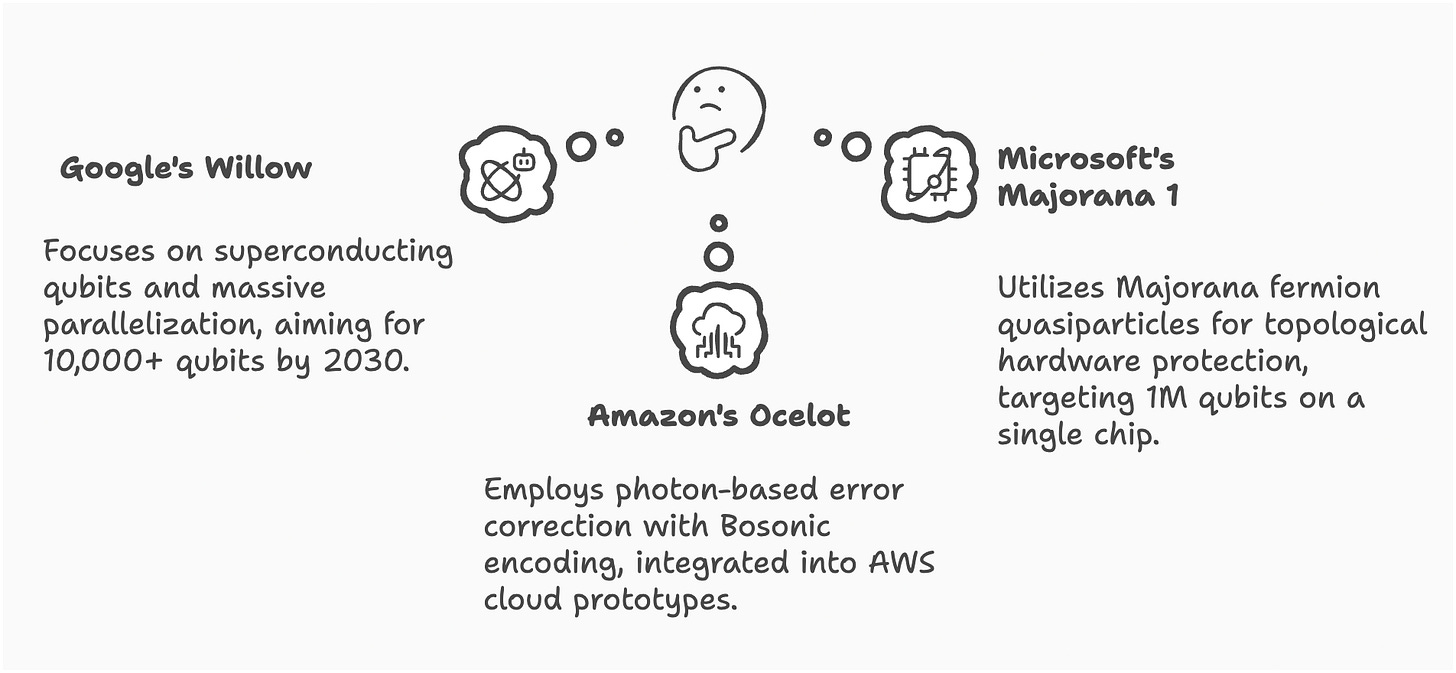

Google: Betting on Scale with the Willow Chip

Google was the first of the three to announce its breakthrough, with the Willow chip. Google’s Willow processor uses superconducting qubits, the noisy, error-prone hardware typical of today’s Noisy Intermediate-Scale Quantum (NISQ) era. Google’s strategy focuses on scale: packing more and more error-prone physical qubits onto a chip and using quantum error correction (specifically, the surface code) to compensate for the noise.

Key innovations:

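Breakthrough in Error Correction: Willow’s logical error rate falls exponentially as more physical qubits are devoted to each logical qubit. As Google grew its encoded grids from 3×3 to 5×5 to 7×7 qubits, the error rate was cut roughly in half each time. This “below threshold” behavior is a critical milestone toward fault-tolerant quantum computing.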

Unprecedented Computational Speed: On the random circuit sampling (RCS) benchmark, Willow performed a computation in under five minutes that Google estimates would take one of today’s fastest supercomputers 10 septillion (10^25) years.

Scalability with Logical Qubits: Willow groups physical qubits into logical qubits, improving error resilience as the system scales.

105-Qubit Capacity: With 105 qubits, Willow sets a benchmark for quantum systems, enabling complex simulations and optimization tasks.

Google claims this approach will give them the ability to solve real-world problems faster than classical computers by 2030.
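Google’s “exponential error reduction” can be illustrated with the standard textbook scaling heuristic for the surface code: below a threshold physical error rate, each increase of the code distance d by two divides the logical error rate by a roughly constant factor. The sketch below is a simplified model, not Google’s analysis. The prefactor, physical error rate, and threshold are hypothetical values, chosen so the error halves at each step (the factor Google reported for Willow), and the qubit count assumes the common “rotated” surface-code layout.

```python
def logical_error_rate(p_phys: float, p_threshold: float, distance: int,
                       prefactor: float = 0.1) -> float:
    """Approximate logical error rate per cycle for a distance-d surface code.

    Textbook heuristic: eps_d ~ A * (p / p_th) ** ((d + 1) / 2).
    The prefactor A and both error rates are illustrative assumptions.
    """
    if distance < 3 or distance % 2 == 0:
        raise ValueError("surface-code distance should be an odd integer >= 3")
    return prefactor * (p_phys / p_threshold) ** ((distance + 1) / 2)


def surface_code_qubits(distance: int) -> int:
    """Physical qubits in a rotated surface code: d*d data + (d*d - 1) measure qubits."""
    return 2 * distance * distance - 1


# Hypothetical operating point: physical error rate at half the threshold.
P_PHYS, P_TH = 0.005, 0.01

for d in (3, 5, 7):
    eps = logical_error_rate(P_PHYS, P_TH, d)
    print(f"d={d}: {surface_code_qubits(d):3d} physical qubits, logical error ~ {eps:.2e}")
```

If `p_phys` is set above `p_th`, the ratio exceeds one and adding qubits makes the logical error worse instead of better, which is why operating below threshold is the milestone that matters.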

Microsoft: Topological Qubits with Majorana 1

Microsoft’s Majorana 1 processor introduces topological qubits, a fundamentally new way to store quantum information. Instead of conventional superconducting circuits like Google’s, Microsoft engineered a new material, which it calls a “topoconductor”, that hosts quasiparticles called Majorana zero modes (often described as Majorana fermions) with built-in error resistance.

Key innovations:

Topological Qubits: Introduces qubits built on a new state of matter (topological superconductors), inherently resistant to errors, reducing the need for complex error correction.

Million-Qubit Scalability: Designed to fit up to one million qubits on a single chip, enabling practical, large-scale quantum computing.

Digital Control: Qubits are controlled with simple voltage pulses, much like flipping a switch, instead of finely tuning analog parameters for each individual qubit, making operations more efficient and scalable.

Palm-Sized Architecture: Combines qubits and control electronics into a compact chip that can be deployed in Azure datacenters.

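Microsoft has a roadmap to one million qubits on a single chip, and it is one of the companies DARPA selected for the final phase of its US2QC program, which evaluates paths to utility-scale quantum computing. The Majorana 1 processor is being hailed as a “transistor moment” for quantum computing.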

Amazon: The Ocelot Chip and Bosonic Error Correction

Amazon’s Ocelot chip takes a different path with bosonic error correction. Instead of storing information in conventional qubits, Ocelot encodes it in quantum states of microwave photons held in superconducting oscillators.

Key innovations:

Cat Qubit Architecture: Uses “cat qubits”, whose design intrinsically suppresses bit-flip errors; AWS says this can reduce the cost of implementing error correction by up to 90% compared to traditional approaches.

Bosonic Error Correction: Implements a scalable, hardware-efficient bosonic error correction system, minimizing the number of physical qubits required for logical qubits.

Noise-Biased Gates: Introduces noise-biased quantum gates that preserve the cat qubits’ strong bias toward phase-flip errors, enhancing hardware efficiency and stability for large-scale quantum computing.

Compact Design: Combines all error correction components within a single microchip, setting a new standard for compact and scalable quantum architectures.

Ocelot shows Amazon’s focus on making quantum resources economically viable for mainstream AI workloads in the future.
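The logic behind this design can be sketched with a toy error budget. A cat qubit’s bit-flip rate falls exponentially as the mean photon number in its oscillator grows, while its phase-flip rate grows only roughly linearly, so the remaining phase flips can be handled by a simple repetition code (a majority vote) rather than a full two-dimensional code. The model and every number below (the photon number, the rate constants, and the code lengths) are hypothetical illustration values, not Ocelot measurements.

```python
import math


def cat_error_rates(mean_photons: float, bit_scale: float = 1.0,
                    phase_per_photon: float = 1e-3) -> tuple[float, float]:
    """Toy per-cycle error rates for one cat qubit (hypothetical constants).

    Bit flips shrink exponentially with the mean photon number;
    phase flips grow linearly with it.
    """
    p_bit = bit_scale * math.exp(-2.0 * mean_photons)
    p_phase = phase_per_photon * mean_photons
    return p_bit, p_phase


def repetition_code_failure(p: float, n: int) -> float:
    """Probability that a majority vote over n cat qubits fails (more than n // 2 flips)."""
    return sum(math.comb(n, k) * p**k * (1 - p) ** (n - k)
               for k in range(n // 2 + 1, n + 1))


def logical_error_bound(mean_photons: float, n: int) -> float:
    """Union bound: any bit flip among n cats, or a failed phase-flip majority vote."""
    p_bit, p_phase = cat_error_rates(mean_photons)
    p_any_bit_flip = 1 - (1 - p_bit) ** n
    return p_any_bit_flip + repetition_code_failure(p_phase, n)


# Hypothetical operating point: mean photon number of 8.
for n in (1, 3, 5):
    print(f"n={n}: logical error <= {logical_error_bound(8.0, n):.2e}")
```

In this toy model, bit flips are already negligible, so only a one-dimensional repetition code is needed against phase flips, which illustrates where the claimed hardware savings come from.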

Three Core Challenges and the Different Approaches to Solving Them

The fundamental challenges that Google, Microsoft, and Amazon are addressing in quantum computing are error correction, scalability, and decoherence. These issues are critical to making quantum computing practical and reliable for real-world applications, including AI.

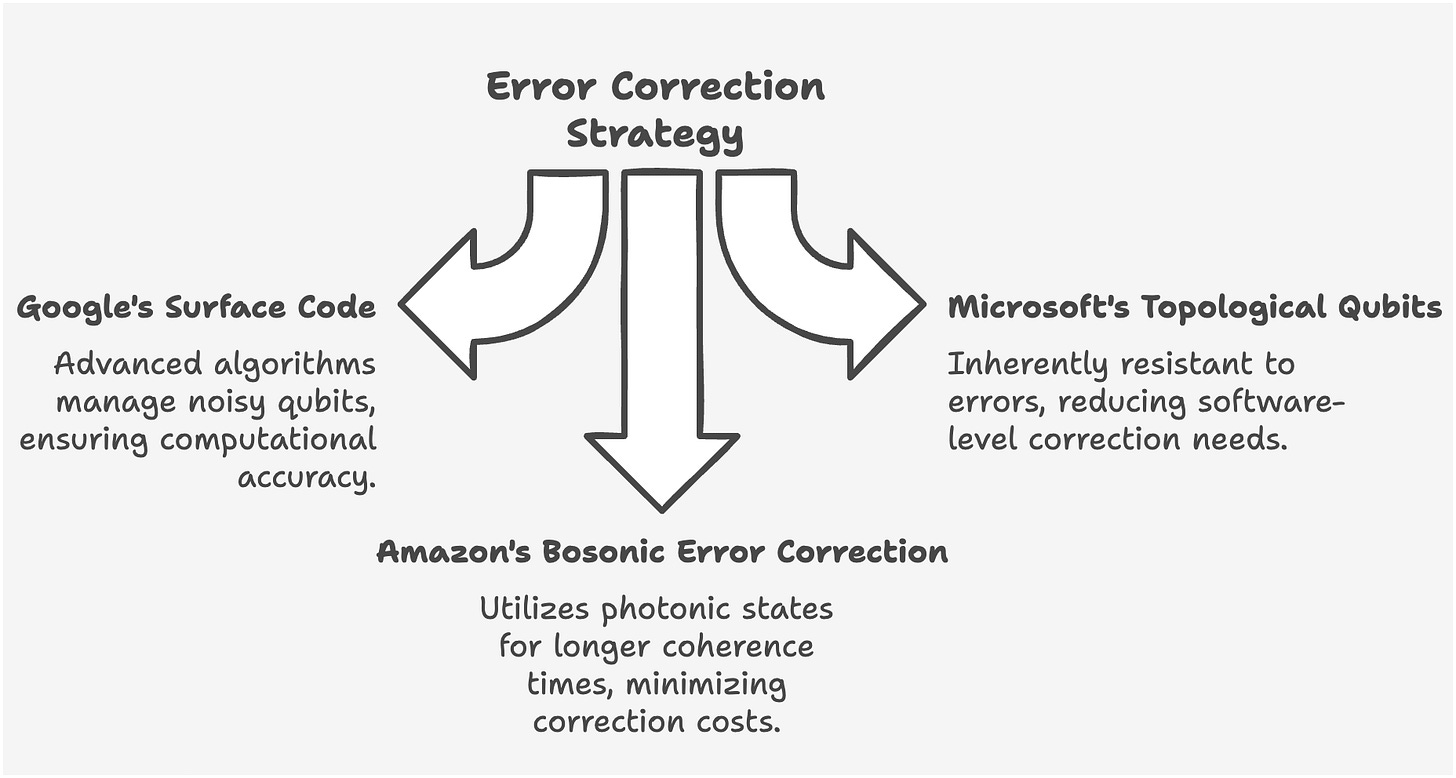

Error Correction

Quantum systems are highly sensitive to noise and environmental interference, which can lead to errors in computation. Unlike classical computers, quantum systems require specialized methods to detect and correct errors without collapsing the quantum state.

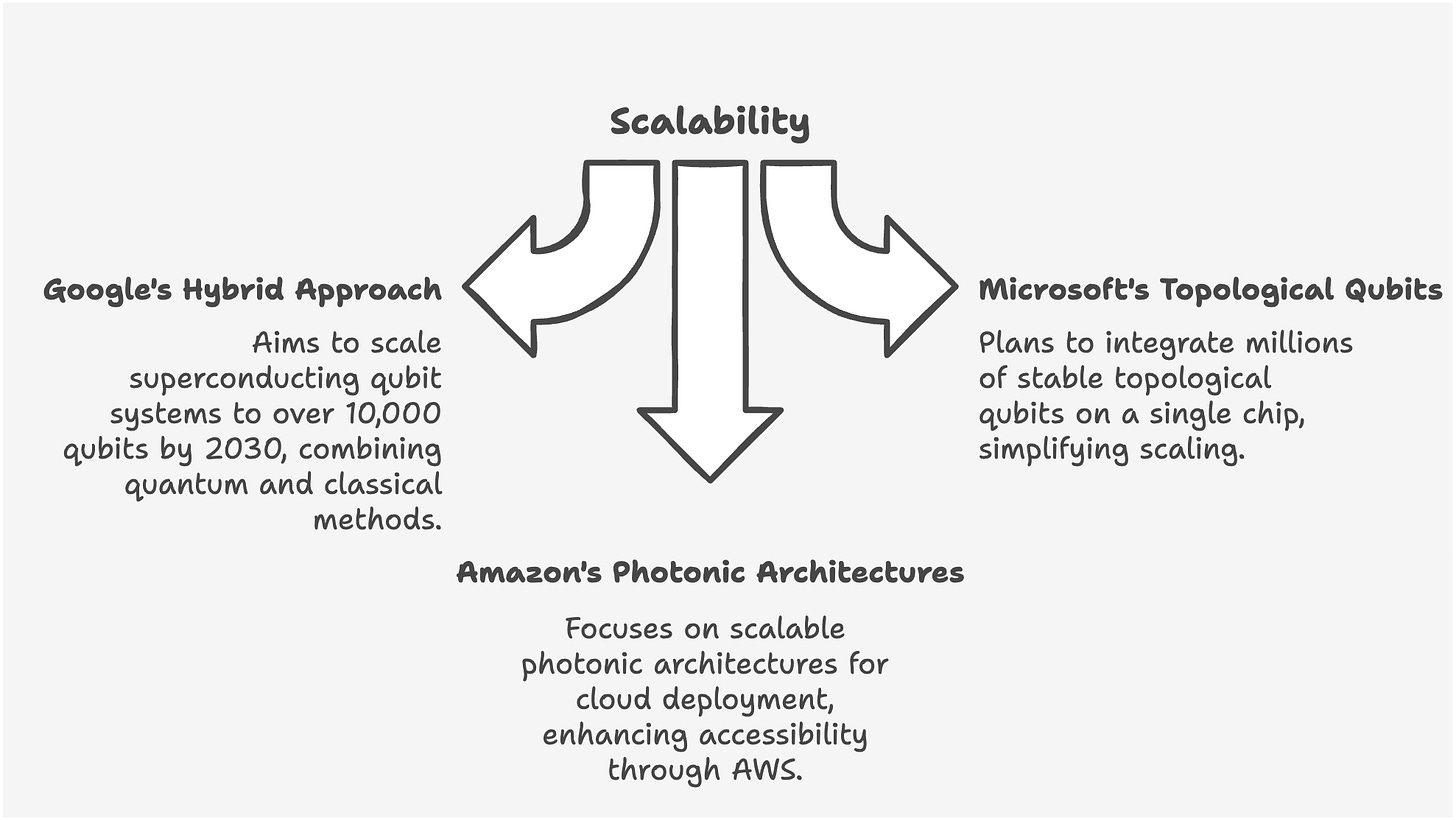
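The trick behind detecting errors “without collapsing the quantum state” is to measure parities between qubits rather than the qubits themselves. The sketch below is a purely classical simulation of the textbook three-qubit bit-flip code, a much simpler code than the ones the three companies use: the two parity checks (the classical analogue of the Z1Z2 and Z2Z3 stabilizer measurements) reveal which qubit flipped but give the same answer whether the encoded value is 0 or 1. It models bit flips only; real quantum codes must also handle phase flips.

```python
# Classical simulation of the 3-qubit bit-flip (repetition) code.
# Logical 0 is encoded as [0, 0, 0] and logical 1 as [1, 1, 1].

def encode(bit: int) -> list[int]:
    return [bit, bit, bit]


def flip(codeword: list[int], position: int) -> list[int]:
    """Apply a bit-flip error at one position (returns a new list)."""
    noisy = codeword.copy()
    noisy[position] ^= 1
    return noisy


def syndrome(codeword: list[int]) -> tuple[int, int]:
    """Parity checks q0 XOR q1 and q1 XOR q2; they never read the encoded value itself."""
    return codeword[0] ^ codeword[1], codeword[1] ^ codeword[2]


# Position to flip back for each syndrome (None means no error detected).
CORRECTION = {(0, 0): None, (1, 0): 0, (1, 1): 1, (0, 1): 2}


def correct(codeword: list[int]) -> list[int]:
    position = CORRECTION[syndrome(codeword)]
    return codeword if position is None else flip(codeword, position)


for logical in (0, 1):
    for pos in range(3):
        noisy = flip(encode(logical), pos)
        # The syndrome depends only on where the error is, not on the logical value.
        print(logical, pos, syndrome(noisy), correct(noisy) == encode(logical))
```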

Scalability

Scaling up quantum computers to hundreds or thousands of qubits is essential for solving complex problems. However, as the number of qubits increases, maintaining coherence and minimizing errors becomes exponentially harder.

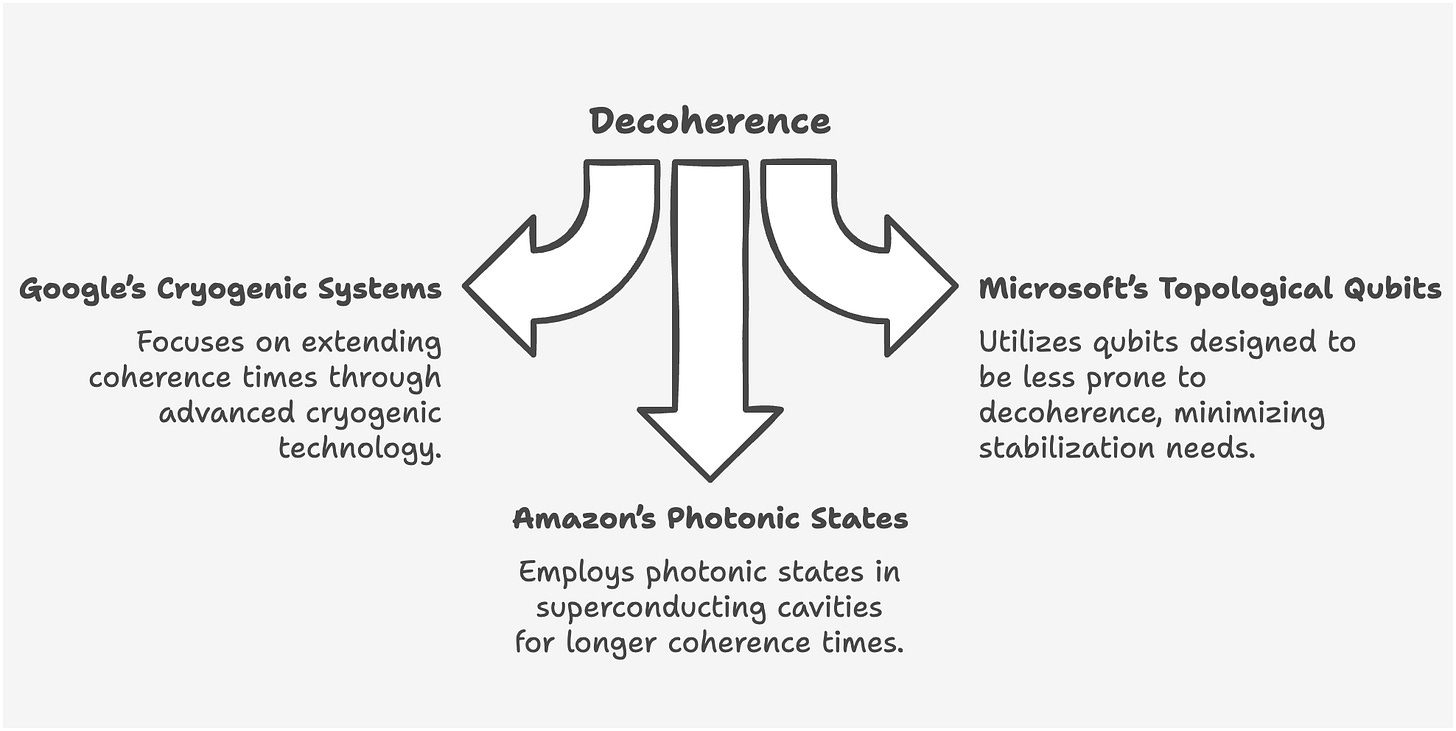
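A back-of-the-envelope model shows why errors compound with scale. If each of N qubits independently suffers an error with probability p during one computational step, the chance that the whole step is error-free is (1 - p)^N, which shrinks exponentially as N grows. The 0.1% error rate below is a hypothetical illustration value, and the independence assumption ignores correlated errors such as crosstalk.

```python
import math


def error_free_probability(num_qubits: int, error_per_qubit_step: float,
                           steps: int = 1) -> float:
    """Chance that no qubit errs over `steps` steps, assuming independent errors.

    Equals (1 - p) ** (num_qubits * steps); log1p keeps it accurate for tiny p.
    """
    return math.exp(num_qubits * steps * math.log1p(-error_per_qubit_step))


# Hypothetical per-qubit, per-step error rate of 0.1%.
for n in (100, 1_000, 10_000):
    print(f"{n:>6} qubits: P(no error in one step) = {error_free_probability(n, 1e-3):.3g}")
```

At p = 0.1%, a 1,000-qubit step is error-free only about 37% of the time, which is why simply adding qubits without error correction does not help.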

Decoherence

Decoherence occurs when quantum states lose their superposition (a principle of quantum mechanics that describes how a quantum system can exist in multiple states simultaneously) due to interactions with the environment. This limits the time available for computations.
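One common simplified way to quantify this limit is to assume coherence decays exponentially with a characteristic coherence time T2, and then express the usable time as a number of sequential gates. The sketch assumes a pure exponential decay and uses hypothetical values for T2 and the gate duration; real qubits often decay in more complicated ways.

```python
import math


def remaining_coherence(elapsed_ns: float, t2_ns: float) -> float:
    """Fraction of coherence left after `elapsed_ns`, assuming exp(-t / T2) decay."""
    return math.exp(-elapsed_ns / t2_ns)


def gate_budget(t2_ns: int, gate_ns: int) -> int:
    """How many sequential gates fit inside one coherence time T2."""
    return t2_ns // gate_ns


# Hypothetical values: T2 = 100 microseconds, 50-nanosecond gates.
T2_NS, GATE_NS = 100_000, 50

print(gate_budget(T2_NS, GATE_NS))  # 2000
for gates in (100, 1_000, 2_000):
    print(gates, f"{remaining_coherence(gates * GATE_NS, T2_NS):.3f}")
```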

Implications for AI

These are early days for quantum computing, but soon, AI engineers should be able to leverage quantum computers for a variety of tasks.

Medium-term implications (2025–2030)

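Faster Optimization: Quantum computing could deliver better or faster solutions for some hard optimization problems, such as resource allocation, boosting the efficiency of AI applications. Speedups are likely to be problem-specific, though: quantum computers are not expected to solve NP-hard problems exponentially faster in general.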

Quantum Machine Learning (QML): Hybrid quantum-classical systems could enable new algorithms for generative models, improving AI performance in areas like personalized medicine and climate modeling.

Energy Efficiency: Quantum systems could reduce the computational and energy costs of training large AI models, allowing us to do more and scale with growing demands.
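The “hybrid quantum-classical” pattern mentioned under Quantum Machine Learning usually means a loop: a parameterized quantum circuit is run to estimate an expectation value, and a classical optimizer updates the parameters. The sketch below simulates a one-qubit circuit exactly instead of using real hardware: a rotation RY(θ) applied to |0⟩, whose expected Z measurement is cos θ. Gradients come from the parameter-shift rule, a standard way to estimate such gradients from circuit runs. The starting angle, learning rate, and step count are arbitrary illustration values.

```python
import math


def expectation_z(theta: float) -> float:
    """<Z> for RY(theta)|0>, simulated exactly: cos^2(theta/2) - sin^2(theta/2)."""
    amp0, amp1 = math.cos(theta / 2), math.sin(theta / 2)
    return amp0**2 - amp1**2


def parameter_shift_grad(theta: float) -> float:
    """Parameter-shift rule: the gradient from two shifted circuit evaluations."""
    shift = math.pi / 2
    return (expectation_z(theta + shift) - expectation_z(theta - shift)) / 2


def train(theta: float = 0.1, lr: float = 0.4, steps: int = 100) -> float:
    """Classical gradient descent that minimizes the 'quantum' cost <Z>."""
    for _ in range(steps):
        theta -= lr * parameter_shift_grad(theta)
    return theta


theta_opt = train()
print(f"theta = {theta_opt:.4f} (pi = {math.pi:.4f}), <Z> = {expectation_z(theta_opt):.4f}")
```

On real hardware, `expectation_z` would be replaced by repeated circuit executions and measurement averaging, while the classical update loop stays the same.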

Long-term implications (2030 onwards)

Breakthrough AI Models: Quantum neural networks and quantum transformers could outperform classical architectures, enabling AI to tackle complex problems like NLP and protein folding with far fewer parameters. This could have huge implications for running state-of-the-art (SOTA) models on edge and mobile devices.

New Problem Domains: Quantum-AI integration could unlock innovation in areas like drug discovery, materials science, and logistics that current systems cannot tackle.

There is a lot to be excited about at the intersection of AI and quantum computing. What do you think? Let me know in the comments below.