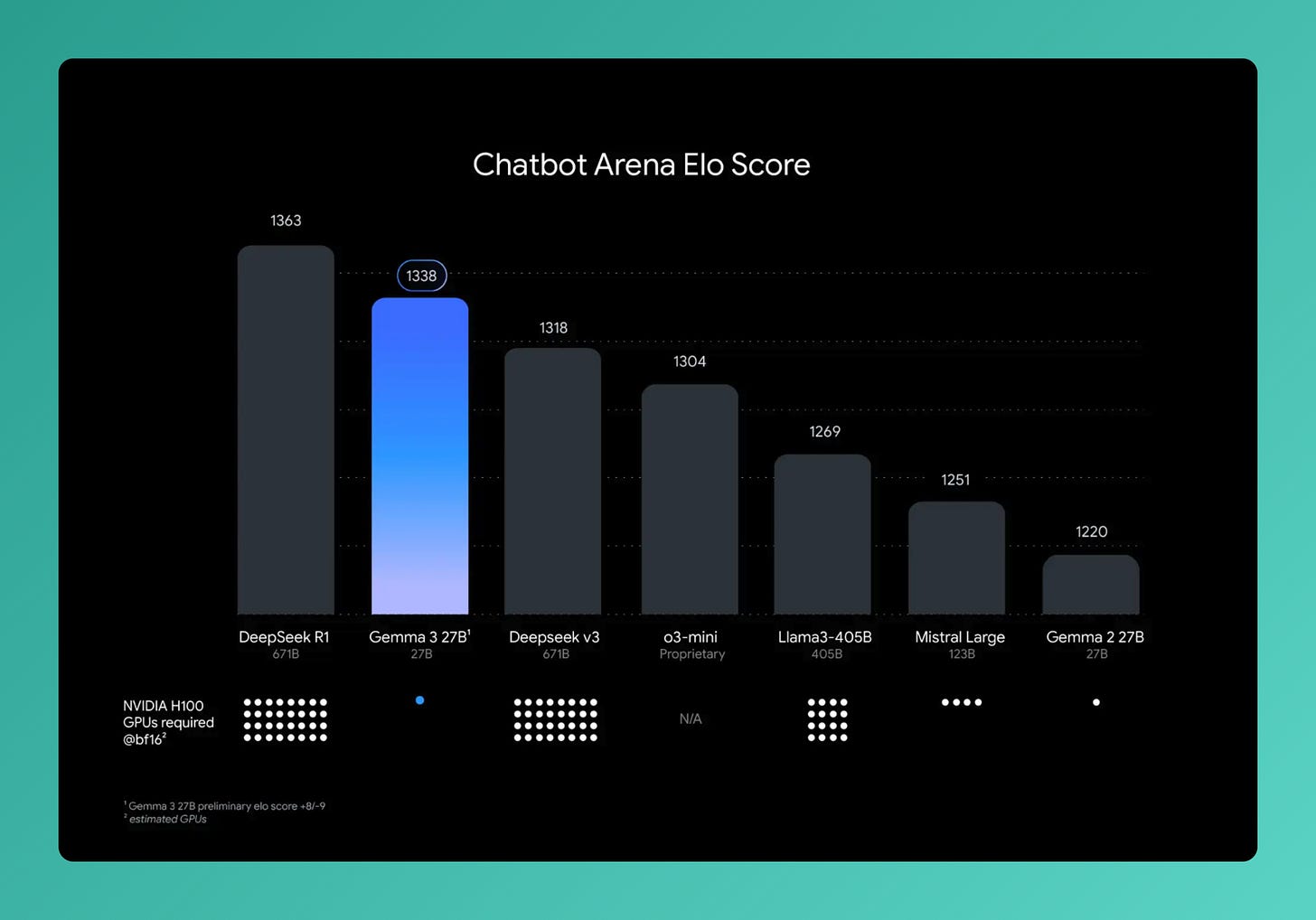

Google's Gemma3 - Testing the new features

Google's latest model is very capable and runs well on your own machines. We take a closer look and test some of the features.

Google has two families of LLMs: the flagship Gemini, which runs in Google Cloud (GCP), and the smaller Gemma, which is optimized to run on a single GPU machine. Google just announced Gemma 3, a small but impressive model, especially if you are considering running one on your own computer.

Key features:

The model supports an expanded 128k-token context window, ideal for processing large text and images. This is a significant improvement over Gemma 2’s 8k-token limit.

Gemma 3 is multimodal, so you can use it to analyze images and short videos in addition to text.

Gemma 3 claims to support around 140 languages.

It supports function calling and structured output, which are very important features for development and automation.

We will put many of these claims to the test.
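To make the structured output claim concrete, here is a minimal sketch of how a schema-constrained request could look. It assumes the model is served locally through Ollama (see the installation notes) and that the installed Ollama version accepts a JSON schema in the `format` field of `/api/chat`. The schema, the prompt wording and the function names are made up for illustration and are not something I tested in this post.

```python
import json
import urllib.request

CHAT_URL = "http://localhost:11434/api/chat"  # assumed default local Ollama endpoint

# Hypothetical schema: the fields we want the model to fill in.
SCHEMA = {
    "type": "object",
    "properties": {
        "language": {"type": "string"},
        "sentiment": {"type": "string", "enum": ["positive", "neutral", "negative"]},
    },
    "required": ["language", "sentiment"],
}


def build_structured_payload(text, model="gemma3:12b"):
    """Build a chat request that asks for a reply matching SCHEMA."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": f"Classify this text: {text}"}],
        "format": SCHEMA,
        "stream": False,
    }


def parse_reply(raw_response):
    """The reply text sits in message.content as a JSON string; decode it."""
    return json.loads(raw_response["message"]["content"])


def classify(text, model="gemma3:12b", url=CHAT_URL):
    """Send the request to a running Ollama server and return the parsed dict."""
    body = json.dumps(build_structured_payload(text, model)).encode("utf-8")
    request = urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request) as response:
        return parse_reply(json.loads(response.read()))
```

Calling `classify("I love this model")` needs the Ollama server to be running. The payload builder and the parser work without it, which makes them easy to check on their own.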

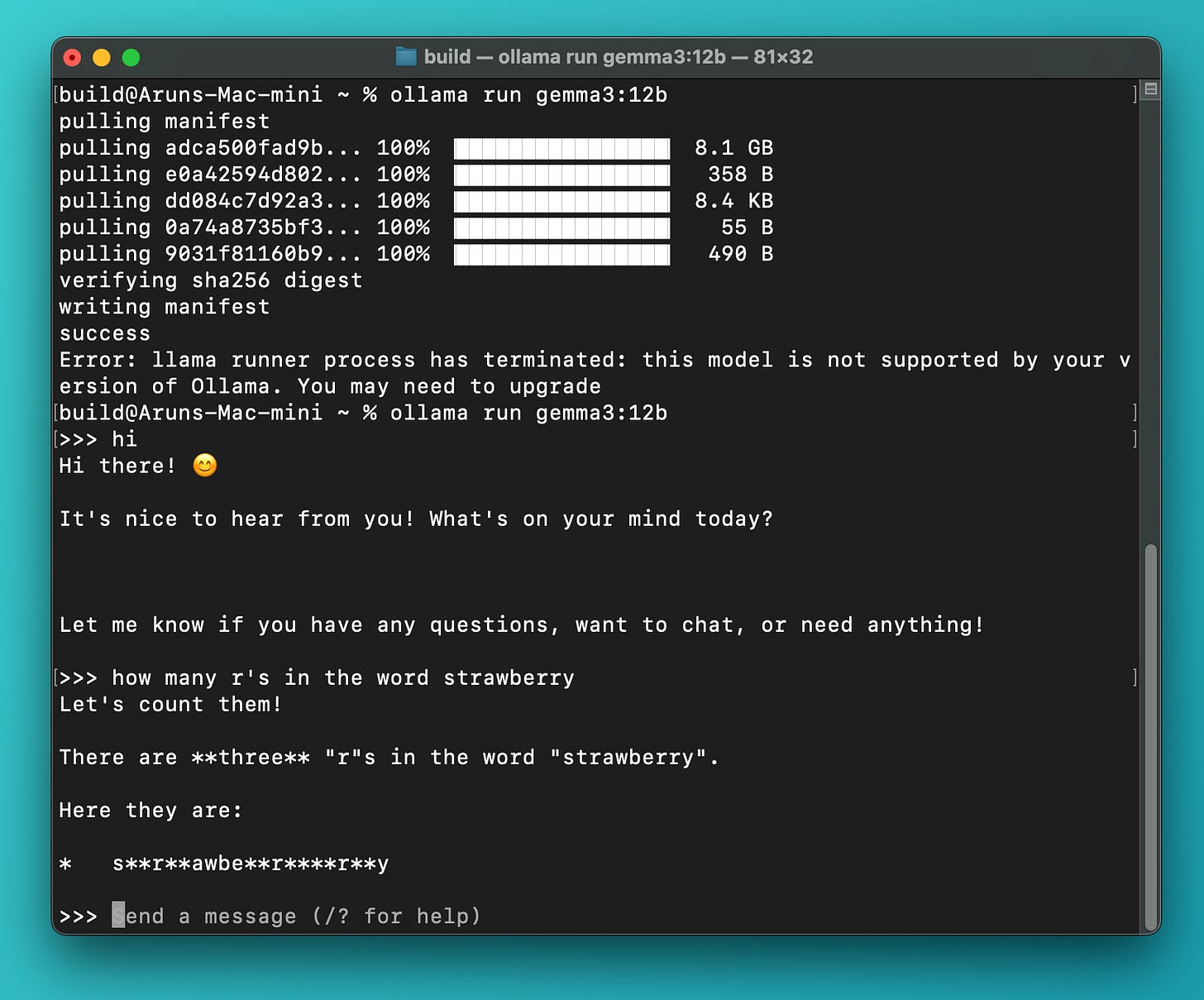

Installation:

To install it, you can use Ollama or any of your favorite alternatives. The 12B model is about 8 GB in size. Gemma 3 is also available in other sizes: 1B, 4B and 27B parameters.

ollama run gemma3:12b

It was a delight to see it get the strawberry problem (counting the letter r in "strawberry") right. Most small models still struggle with this and only get it right with elaborate prompting.
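If you would rather script the test than type into the terminal, here is a rough sketch that sends a prompt to the local Ollama server over its HTTP API. It assumes Ollama is listening on its default port 11434 and that `gemma3:12b` has already been pulled. The function names are my own.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # assumed default local endpoint


def build_generate_payload(prompt, model="gemma3:12b"):
    """Build the JSON body for a single, non-streamed completion."""
    return {"model": model, "prompt": prompt, "stream": False}


def ask(prompt, model="gemma3:12b", url=OLLAMA_URL):
    """Send the prompt to a running Ollama server and return the reply text."""
    body = json.dumps(build_generate_payload(prompt, model)).encode("utf-8")
    request = urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())["response"]
```

With the server running, `print(ask("How many times does the letter r appear in the word strawberry?"))` repeats the strawberry test from a script.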

Testing Multimodality:

Next, I uploaded a screenshot and asked it to tell me what it was. It did a good job of analyzing a small vibe coding project I did last week and was able to guess the purpose of the application from the screenshot. Please note that Gemma cannot generate images or videos (too high an expectation?) as it is not a diffusion model. Google has other models that do this well.

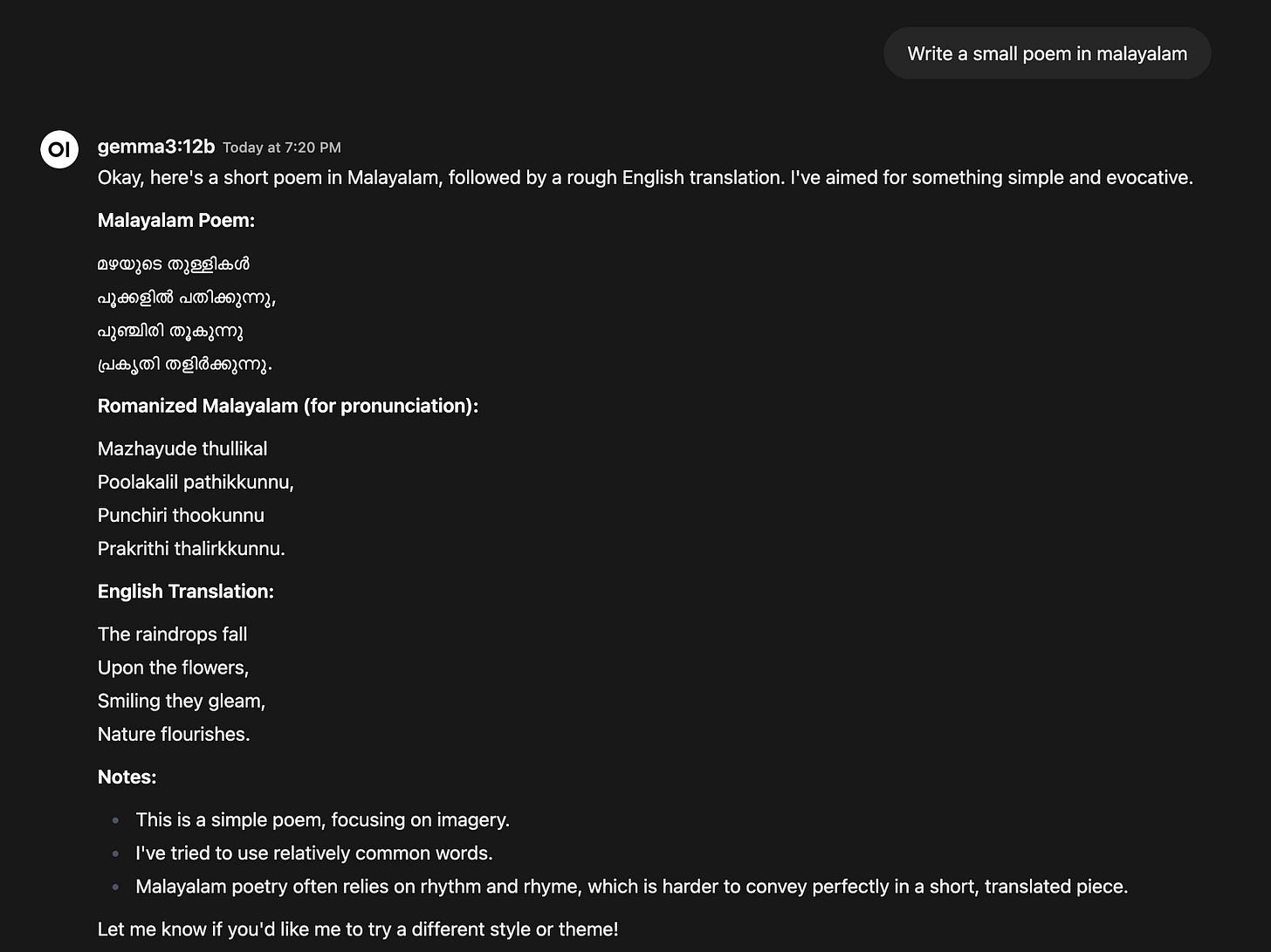

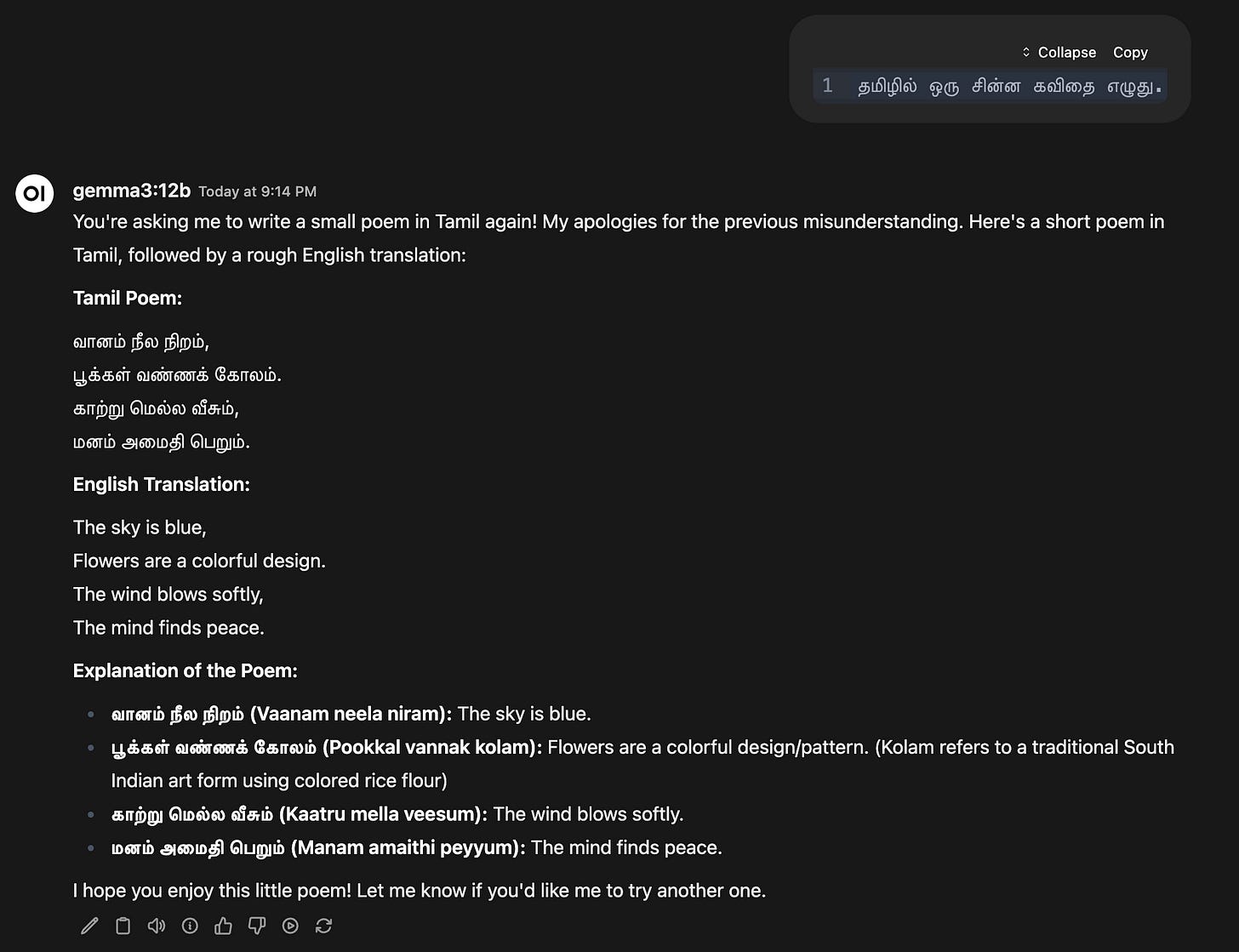
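The same screenshot test can be scripted. The sketch below assumes a local Ollama server on the default port and relies on the `images` field of `/api/generate`, which takes base64-encoded image data. The file path and the function names are placeholders.

```python
import base64
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # assumed default local endpoint


def encode_image(image_bytes):
    """Ollama expects images as base64 strings, not raw bytes."""
    return base64.b64encode(image_bytes).decode("ascii")


def build_vision_payload(prompt, image_bytes, model="gemma3:12b"):
    """Build a non-streamed request that attaches one image to the prompt."""
    return {
        "model": model,
        "prompt": prompt,
        "images": [encode_image(image_bytes)],
        "stream": False,
    }


def describe_image(path, prompt="What is shown in this screenshot?", url=OLLAMA_URL):
    """Read an image file, send it to the model and return the reply text."""
    with open(path, "rb") as image_file:
        payload = build_vision_payload(prompt, image_file.read())
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())["response"]
```

For example, `describe_image("screenshot.png")` would send a hypothetical local file and return the model's description.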

Testing Multilingual Ability:

I tried a few combinations of English and non-English (Hindi, Tamil and Malayalam) prompting and generation. The outcomes were a bit inconsistent.

1. English → Malayalam

Results were as expected.

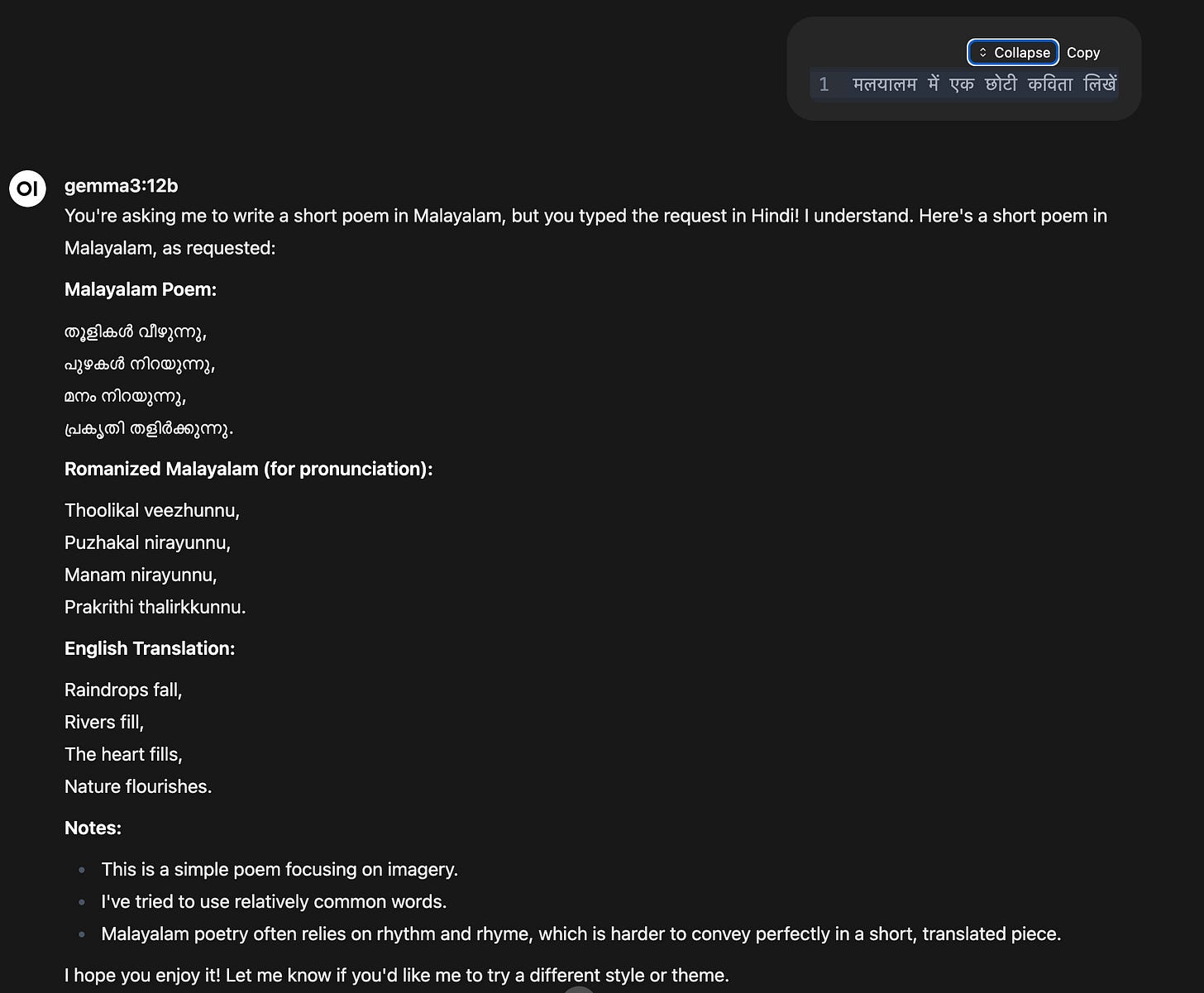

2. Hindi → Malayalam

It understood the assignment perfectly, but the quality of the poem went down and it introduced typos.

3. Tamil → Hindi

Here it completely messed up. It should have written the poem in Hindi but ended up writing it in Tamil.

4. Tamil → Tamil

Again it did a good job.

From my very basic testing, as long as you stay in the same language for input and output, Gemma 3 does a decent job. This is a great performance for a self-hosted open model, as even its larger closed-source cousins struggle when crossing language boundaries.
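A failure like the Tamil → Hindi case, where the reply comes back in the wrong language, can be caught automatically with a simple script check. The sketch below counts which Unicode block most of the letters in a reply belong to. It is only a heuristic of my own: it identifies the script rather than the language (Devanagari is also used for languages other than Hindi), and it says nothing about the quality of the poem.

```python
# Unicode block ranges for the scripts used in the tests above.
SCRIPT_RANGES = {
    "Hindi": (0x0900, 0x097F),      # Devanagari block
    "Tamil": (0x0B80, 0x0BFF),
    "Malayalam": (0x0D00, 0x0D7F),
}


def dominant_language(text):
    """Return the label whose script covers the most letters, or None if none match."""
    counts = {name: 0 for name in SCRIPT_RANGES}
    counts["English"] = 0
    for ch in text:
        if ch.isascii() and ch.isalpha():
            counts["English"] += 1  # plain Latin letters are counted as English
            continue
        code = ord(ch)
        for name, (low, high) in SCRIPT_RANGES.items():
            if low <= code <= high:
                counts[name] += 1
                break
    best = max(counts, key=counts.get)
    return best if counts[best] > 0 else None


def wrote_in_expected_language(reply, target):
    """True when the reply is mostly written in the target's script."""
    return dominant_language(reply) == target
```

Running `wrote_in_expected_language(reply, "Hindi")` on each model reply would flag the cases where the model answered in the prompt's language instead of the requested one.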

Gemma 3 is an awesome model for running locally, and it pushes the possibilities to the next level with vision and multilingual support!